HTCondor Annex - Scalability and Stability

By Fabio Andrijauskas - UCSD

One challenge for high-throughput computing (HTC) is integrating with computational clouds or other clusters to obtain more computing power. HTCondor Annex can create worker nodes on clusters managed by schedulers such as HTCondor, Slurm, and others.

Executive summary

HTCondor Annex provides additional computing power while keeping the same structure as a standard HTCondor environment. Key points about HTCondor Annex and the test environment:

- All tests used ap40.uw.osg-htc.org to create jobs and Annexes, and ap1.facility.path-cc.io as the HTCondor Annex target.

- The methodology covered the absolute number of worker daemons, peak execute daemon provisioning, peak execute daemon termination, the external killing of execute daemons while the system is loaded, and the long-term stability of a large system.

- Over three months, we ran more than 250k jobs and created more than 100k Annexes.

- The Annex creation rate could be improved: the results show that it decreases as the number of Annexes increases.

- An Annex could only be created when fewer jobs were queued; with 50k jobs in the queue, Annex creation failed. The Appendix shows the output of the attempt to create the Annex after the jobs were submitted.

- Integrating clusters other than those already hard-coded in HTCondor Annex is not possible.

- Removing jobs does not always remove the HTCondor Annexes from the target host, and SSH sessions toward the target are sometimes left behind.

Recommendations

The recommendations are ordered by their impact on users.

- Improve the checks performed when an Annex or job is interrupted; in some cases, jobs remain on the target.

- Fix orphaned SSH connections toward the target that remain after the Annexes have terminated.

- Support creating multiple jobs from the same submit script (“queue X”) with HTCondor Annex.

- Show the shared-connection options before Annex creation starts. This would help prevent problems with two-step or CILogon-style authentication. Figure 4 shows the output of a command that creates an Annex and the resulting CILogon authentication request.

- Currently, all jobs must be created first and the Annexes afterward. It would be useful to support both orders: creating the Annex first and then the jobs, or the jobs first and then the Annex.

- Integrate condor_store_cred into Annex creation, or warn users that it must be run before the Annexes can start.

- Allow other targets to be added through configuration files; this is essential, since currently only a hard-coded set of targets can be used.

- Report SSH timeouts to the user; this helps debug firewall issues.

- Consider an alternative to opening port 9618, for security and administrative reasons.

- Create a command to check the status of jobs on the target.
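The SSH-timeout and port-9618 recommendations both come down to telling the user early whether a TCP connection can be made at all. The following is a minimal sketch of such a pre-flight check, assuming Python 3 and only the standard library; `check_port` is a hypothetical helper, not part of HTCondor Annex, and its messages are illustrative. It separates a timeout (often a firewall silently dropping packets) from an explicit refusal (nothing listening), which is the distinction that matters when debugging firewall issues.

```python
import socket


def check_port(host: str, port: int, timeout: float = 5.0) -> str:
    """Try one TCP connection and describe the outcome in user-facing terms.

    Hypothetical helper for illustration; HTCondor Annex does not provide it.
    """
    try:
        # create_connection resolves the host and completes the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return f"{host}:{port} is reachable"
    except socket.timeout:
        # No answer at all: typical of a firewall dropping packets.
        return (f"{host}:{port} timed out after {timeout}s; "
                "a firewall may be silently dropping packets")
    except ConnectionRefusedError:
        # The host answered, but nothing listens on that port.
        return f"{host}:{port} refused the connection; no service is listening"
    except OSError as exc:
        # DNS failures, unreachable networks, and similar errors.
        return f"{host}:{port} is unreachable: {exc}"
```

For example, running `check_port("ap40.uw.osg-htc.org", 9618)` from the target, or checking the target's SSH port (22 by default) from the access point, would surface a firewall problem before `htcondor annex create` opens its SSH session.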

Methodology

The methodology used to test HTCondor Annex has the following goals, covering scalability, stability, and performance:

- Absolute number of worker daemons.

- Peak execute daemon provisioning: show that many execute daemons can be started within a short amount of time and that jobs start on them.

- Peak execute daemon termination:

  - After having many execute daemons running, trigger either job termination or Annex auto-termination so that they happen as close together as possible.

- External killing of execute daemons when the system is loaded (e.g., with over 20k already running):

  - Measure the success/error rates of the daemons de-registering from the negotiator, cleaning up after themselves, and having their termination correctly reflected in Annex commands.

- Long-term stability of a large system:

  - After bringing the system to a large baseline (e.g., 20k), check whether it can be sustained.
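The de-registration measurement in the external-killing goal can be expressed as a set comparison: every execute daemon that was killed should eventually disappear from the collector. The sketch below assumes two inputs gathered by the tester, the names of the daemons that were killed and the names the collector still advertises after a settling period (for example, as listed by `condor_status`); `DeregistrationReport` and `deregistration_report` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DeregistrationReport:
    killed: int               # daemons that were deliberately killed
    deregistered: int         # killed daemons no longer advertised
    stragglers: list[str]     # killed daemons the collector still lists

    @property
    def success_rate(self) -> float:
        # An empty test counts as fully successful rather than dividing by zero.
        return self.deregistered / self.killed if self.killed else 1.0


def deregistration_report(killed: set[str],
                          still_advertised: set[str]) -> DeregistrationReport:
    """Compare the killed daemons with what the collector still advertises."""
    stragglers = sorted(killed & still_advertised)
    return DeregistrationReport(
        killed=len(killed),
        deregistered=len(killed) - len(stragglers),
        stragglers=stragglers,
    )
```

Names that are advertised but were never killed are ignored, so the rate reflects only the daemons under test.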

HTCondor Annex Tests

Table 1 lists the tests run with HTCondor Annex, using ap40.uw.osg-htc.org (HTCondor Annex) and ap1.facility.path-cc.io (worker target). Each result is classified as positive, neutral, or negative: a positive result means the test ran without error messages or other problems; a neutral result means the test ran successfully, but some aspects require further analysis; a negative result means the test could not run.

| Test | Results | Comments |

|---|---|---|

| Create a working node using OSG AP | Positive | It was required to run: echo | condor_store_cred add-oauth -i - -s scitokens and echo | condor_store_cred query- oauth. |

| Running job using Annex | Positive | It is possible to create the Jobs and run the Annex. |

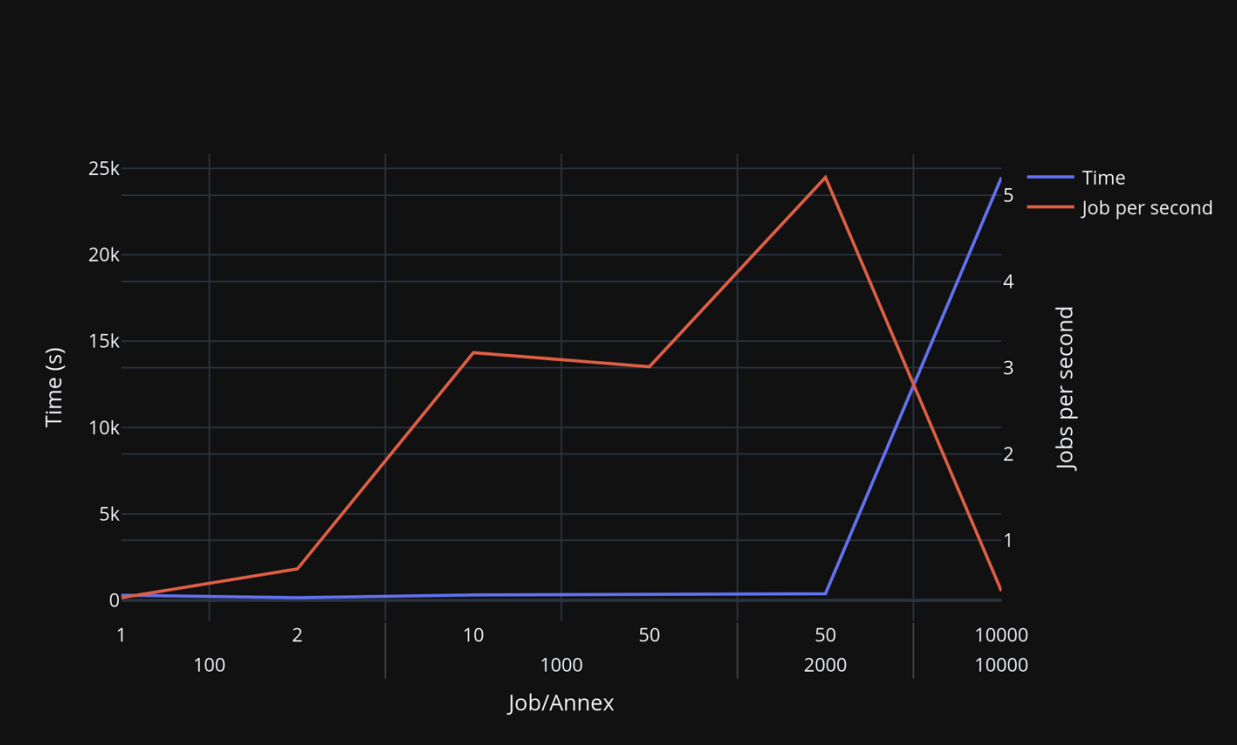

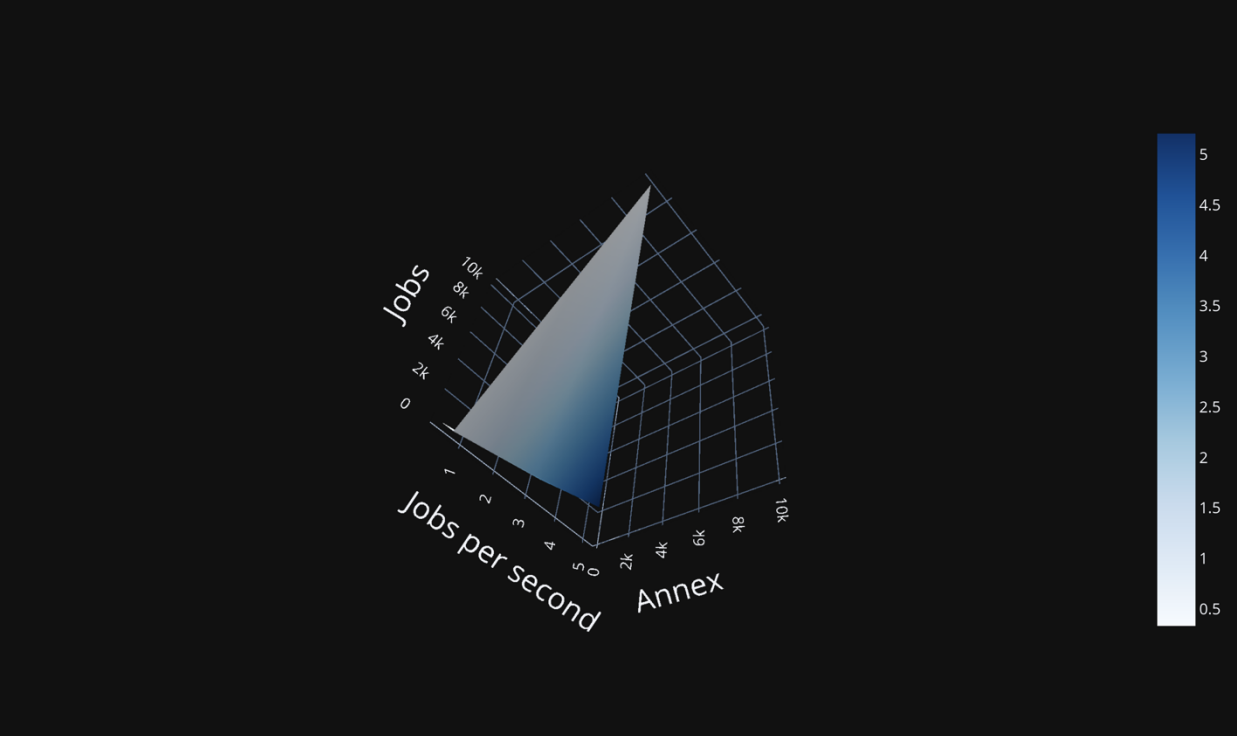

| Run jobs using X Annex and Y jobs | Neutral | Figure 1 and Figure 2 shows the results. |

| Run 50k jobs and use one Annex | Negative | It was not possible to create the Annex, the Appendices show more details about it. |

| Run 50k Jobs and ran one Annex | Negative | It was not possible to create the Annex, the Appendices show more details about it. |

| Kill an Annex while the job is running. | Positive | |

| Kill a job while an Annex is running | Neutral | Kill the annex, and sometimes it is got stuck on the target. |

| Long Run Annex – 1 month | Positive | Close to 100k jobs and 100k Annex in a one month. |

| Create jobs to run on the Annex using: queue X | Negative | It is not possible to run multiple jobs using one script. |

| Delete all Annex and all the jobs using condor_rm | Negative | There is some Annex got stuck on the target. |

Figure 1 shows the tests executed with HTCondor Annex using ap40.uw.osg-htc.org (HTCondor Annex) and ap1.facility.path-cc.io (worker target). The left vertical axis shows the time to start the job (a simple `sleep 1` job), and the right vertical axis shows the jobs executed per second. On the horizontal axis, the first line is the number of Annexes and the second line is the number of jobs. From 1 to 10 Annexes, the throughput in jobs per second increases; adding more Annexes makes the rate decrease.

Figure 2 shows the same results as Figure 1 as a 3D plot, which makes it easier to see where performance starts to decrease; the bar color represents the jobs per second.
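The jobs-per-second metric in Figures 1 and 2, and the point where it starts to decline, can be computed from per-run measurements. This sketch assumes each run records the number of Annexes, the number of completed jobs, and the wall-clock time from first submission to last completion; the report does not state exactly how its rate was computed, so that definition of elapsed time is an assumption, and `Run`, `peak_annex_count`, and `first_decline` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    annexes: int        # number of Annexes used in the run
    jobs: int           # number of jobs completed in the run
    elapsed_s: float    # assumed: first submission to last completion, seconds

    @property
    def jobs_per_second(self) -> float:
        return self.jobs / self.elapsed_s


def peak_annex_count(runs: list[Run]) -> int:
    """Annex count of the run with the highest throughput."""
    return max(runs, key=lambda r: r.jobs_per_second).annexes


def first_decline(runs: list[Run]) -> int | None:
    """First Annex count whose throughput is lower than the next smaller count's."""
    ordered = sorted(runs, key=lambda r: r.annexes)
    for prev, cur in zip(ordered, ordered[1:]):
        if cur.jobs_per_second < prev.jobs_per_second:
            return cur.annexes
    return None  # throughput never dropped as Annexes were added
```

Feeding it the measurements behind Figure 1 would locate both the best-performing Annex count and the first count at which adding Annexes lowers throughput.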

Appendix

The tests used two hosts: ap40.uw.osg-htc.org to create jobs and Annexes, and ap1.facility.path-cc.io to run the Annexes:

- ap40.uw.osg-htc.org:
  - 502 GB RAM.
  - AMD EPYC 7763 64-Core Processor.
  - CentOS Stream release 8.
  - CondorVersion: 23.3.0 2023-12-15 BuildID: 695193 PackageID: 23.3.0-0.695193 RC - CondorPlatform: x86_64_AlmaLinux8

- ap1.facility.path-cc.io:
  - 502 GB RAM.
  - AMD EPYC 7763 64-Core Processor.
  - CentOS Stream release 8.
  - CondorVersion: 23.3.0 2023-12-15 BuildID: 695193 PackageID: 23.3.0-0.695193 RC - CondorPlatform: x86_64_AlmaLinux8

Figure 3 shows the error that occurs when 50k jobs are created and an attempt is then made to create an Annex for those jobs.

```text
fabio.andrijauskas ID: 633223 12/14 15:31 _ _ 1 1 633223.0
fabio.andrijauskas ID: 633224 12/14 15:31 _ _ 1 1 633224.0
fabio.andrijauskas ID: 633225 12/14 15:31 _ _ 1 1 633225.0
fabio.andrijauskas ID: 633226 12/14 15:31 _ _ 1 1 633226.0
fabio.andrijauskas ID: 633227 12/14 15:31 _ _ 1 1 633227.0
fabio.andrijauskas ID: 633228 12/14 15:31 _ _ 1 1 633228.0
fabio.andrijauskas ID: 633229 12/14 15:31 _ _ 1 1 633229.0
fabio.andrijauskas ID: 633230 12/14 15:31 _ _ 1 1 633230.0
fabio.andrijauskas ID: 633231 12/14 15:31 _ _ 1 1 633231.0
fabio.andrijauskas ID: 633232 12/14 15:31 _ _ 1 1 633232.0

Total for query: 50000 jobs; 0 completed, 0 removed, 50000 idle, 0 running, 0 held, 0 suspended
Total for fabio.andrijauskas: 50000 jobs; 0 completed, 0 removed, 50000 idle, 0 running, 0 held, 0 suspended
Total for all users: 52321 jobs; 0 completed, 2 removed, 50028 idle, 45 running, 2246 held, 0 suspended

fabio.andrijauskas@ospool-ap2040:~/job$ htcondor job submit 1.sh --annexname path13^C
fabio.andrijauskas@ospool-ap2040:~/job$ htcondor -v annex create path12 cpu@path-facility --login-name fabio.andrijauskas --cpus 1
Attempting to run annex create with options {'annex_name': 'path12',
'queue_at_system': 'cpu@path-facility', 'nodes': 1, 'lifetime': 3600,
'allocation': None, 'owners': 'fabio.andrijauskas', 'collector':
'ap40.uw.osg-htc.org:9618?sock=ap_collector', 'token_file': None,
'password_file': PosixPath('~/.condor/annex_password_file'), 'control_path':
PosixPath('~/.hpc-annex'), 'cpus': 1, 'mem_mb': None, 'login_name':
'fabio.andrijauskas', 'login_host': None, 'startd_noclaim_shutdown': 300,
'gpus': None, 'gpu_type': None, 'test': None}
Creating annex token...
..done.
Found 50000 annex jobs matching 'TargetAnnexName == "path12".
No sif files found, continuing...

This will (as the default project) request 1 CPUs for 1.00 hours for an
annex named 'path12' from the queue named 'cpu' on the system named 'PATh
Facility'. To change the project, use --project. To change the resources
requested, use either --nodes or one or more of --cpus and --mem_mb. To
change how long the resources are reqested for, use --lifetime (in seconds).

This command will access the system named 'PATh Facility' via SSH. To
proceed, follow the prompts from that system below; to cancel, hit CTRL-C.
(You can run 'ssh -o ControlPersist="5m" -o ControlMaster="auto" -o
ControlPath="/home/fabio.andrijauskas/.hpc-annex/master-%C"
[email protected]' to use the shared connection.)

Thank you.

Making remote temporary directory...
... made remote temporary directory /home/fabio.andrijauskas/.hpc-annex/scratch/remote_script.jE2VR4cC ...
Populating annex temporary directory... done.
Submitting state-tracking job...
universe = local
requirements = hpc_annex_start_time =?= undefined
executable = /usr/libexec/condor/annex/annex-local-universe.py
cron_minute = */5
on_exit_remove = PeriodicRemove =?= true
periodic_remove = hpc_annex_start_time + 3600 + 3600 < time()
environment = PYTHONPATH=
MY.arguments = strcat( "$(CLUSTER).0 hpc_annex_request_id ", GlobalJobID, " ap40.uw.osg-htc.org:9618?sock=ap_collector")
jobbatchname = path12 [HPC Annex]
MY.hpc_annex_request_id = GlobalJobID
MY.hpc_annex_name = "path12"
MY.hpc_annex_queue_name = "cpu"
MY.hpc_annex_collector = "ap40.uw.osg-htc.org:9618?sock=ap_collector"
MY.hpc_annex_lifetime = "3600"
MY.hpc_annex_owners = "fabio.andrijauskas"
MY.hpc_annex_nodes = "1"
MY.hpc_annex_cpus = "1"
MY.hpc_annex_mem_mb = undefined
MY.hpc_annex_allocation = undefined
MY.hpc_annex_remote_script_dir = "/home/fabio.andrijauskas/.hpc-annex/scratch/remote_script.jE2VR4cC"

Caught exception while running in verbose mode:
Traceback (most recent call last):
  File "/usr/lib64/python3.6/site-packages/htcondor_cli/annex_create.py", line 872, in annex_inner_func
    submit_result = schedd.submit(submit_description)
  File "/usr/lib64/python3.6/site-packages/htcondor/_lock.py", line 70, in wrapper
    rv = func(*args, **kwargs)
htcondor.HTCondorIOError: Failed to create new proc ID.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/lib64/python3.6/site-packages/htcondor_cli/cli.py", line 131, in main
    verb_cls(logger=verb_logger, **options)
  File "/usr/lib64/python3.6/site-packages/htcondor_cli/annex.py", line 137, in __init__
    annex_create(logger, **options)
  File "/usr/lib64/python3.6/site-packages/htcondor_cli/annex_create.py", line 985, in annex_create
    return annex_inner_func(logger, annex_name, **others)
  File "/usr/lib64/python3.6/site-packages/htcondor_cli/annex_create.py", line 874, in annex_inner_func
    raise RuntimeError(f"Failed to submit state-tracking job, aborting.")
RuntimeError: Failed to submit state-tracking job, aborting.

Error while trying to run annex create:
Failed to submit state-tracking job, aborting.
Cleaning up remote temporary directory...
```
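The state-tracking job in Figure 3 carries `periodic_remove = hpc_annex_start_time + 3600 + 3600 < time()`. As a hedged reading of that expression, one 3600 likely corresponds to the requested lifetime (`'lifetime': 3600` in the options) and the other to an extra hour of margin; the sketch below mirrors the expression in Python to show when the job would be removed. The function name and the split into `lifetime_s` and `margin_s` are assumptions, not part of HTCondor.

```python
from __future__ import annotations


def should_remove(start_time: int | None, now: int,
                  lifetime_s: int = 3600, margin_s: int = 3600) -> bool:
    """Mirror `hpc_annex_start_time + 3600 + 3600 < time()` (hypothetical helper).

    start_time=None stands in for an undefined hpc_annex_start_time. In
    ClassAds, arithmetic and `<` on an undefined value yield undefined, and
    periodic_remove removes a job only when it evaluates to true, so the job
    is kept.
    """
    if start_time is None:
        return False
    # Remove once more than lifetime + margin seconds have passed since start.
    return start_time + lifetime_s + margin_s < now
```

Under this reading, a state-tracking job whose Annex never starts is not removed by this expression, because `hpc_annex_start_time` stays undefined.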

Figure 4 shows the output of Annex creation when a two-step (CILogon) authentication action is requested.

```text
htcondor -v annex create path15 cpu@path-facility --login-name fabio.andrijauskas --cpus 1
Attempting to run annex create with options {'annex_name': 'path15',
'queue_at_system': 'cpu@path-facility', 'nodes': 1, 'lifetime': 3600,
'allocation': None, 'owners': 'fabio.andrijauskas', 'collector':
'ap40.uw.osg-htc.org:9618?sock=ap_collector', 'token_file': None,
'password_file': PosixPath('~/.condor/annex_password_file'), 'control_path':
PosixPath('~/.hpc-annex'), 'cpus': 1, 'mem_mb': None, 'login_name':
'fabio.andrijauskas', 'login_host': None, 'startd_noclaim_shutdown': 300,
'gpus': None, 'gpu_type': None, 'test': None}
Creating annex token...
..done.
Found 1 annex jobs matching 'TargetAnnexName == "path15".
No sif files found, continuing...

This will (as the default project) request 1 CPUs for 1.00 hours for an annex
named 'path15' from the queue named 'cpu' on the system named 'PATh Facility'.
To change the project, use --project. To change the resources requested, use
either --nodes or one or more of --cpus and --mem_mb. To change how long the
resources are reqested for, use --lifetime (in seconds).

This command will access the system named 'PATh Facility' via SSH. To proceed,
follow the prompts from that system below; to cancel, hit CTRL-C.
(You can run 'ssh -o ControlPersist="5m" -o ControlMaster="auto" -o
ControlPath="/home/fabio.andrijauskas/.hpc-annex/master-%C"
[email protected]' to use the shared connection.)

Authenticate at
-----------------
https://cilogon.org/device/?user_code=R3D-CP4-LC4
-----------------
Hit enter when the website tells you to return to your device
```
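The recommendation to show the shared-connection options before Annex creation starts could be approximated by opening the SSH control master first. The sketch below builds the `ssh` command line printed in Figures 3 and 4 (`ControlPersist="5m"`, `ControlMaster="auto"`, and a `ControlPath` of `master-%C` under `~/.hpc-annex`). The login host is redacted in the captured output, so `host` is a placeholder you must supply, and `shared_connection_argv` is a hypothetical helper. With OpenSSH's `ControlMaster=auto`, a later `ssh` using the same `ControlPath` reuses an existing master connection instead of authenticating again; whether `htcondor annex create` picks up a master opened this way is an assumption that was not tested in this report.

```python
from __future__ import annotations

import os


def shared_connection_argv(user: str, host: str,
                           control_dir: str = "~/.hpc-annex",
                           persist: str = "5m") -> list[str]:
    """Build the ssh argv that opens, or reuses, the Annex control master.

    Hypothetical helper; the options mirror those printed by annex create.
    """
    # %C is expanded by ssh itself into a hash of the connection parameters.
    control_path = os.path.join(os.path.expanduser(control_dir), "master-%C")
    return [
        "ssh",
        "-o", f"ControlPersist={persist}",
        "-o", "ControlMaster=auto",
        "-o", f"ControlPath={control_path}",
        f"{user}@{host}",
    ]
```

Passing the list to `subprocess.run` would open the session; the assumed workflow is to complete the CILogon prompt there once and keep that session alive while `htcondor annex create` runs.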